By: erynn young

@lgosp3@k: C0mmun!c@t!0n H@ck!ng on T1kT0k

Introduction

On TikTok, a platform notorious for algorithmic content moderation1, there is an emerging phenomenon of strategic communication hacking, called algospeak for algorithm speak. Algospeak provides users with a seemingly limitless yield of communication hacks in response to content surveillance and policing. Algospeak is situated in a specific digital, interactive context (TikTok), where appropriate content is defined through mobilisations of politics of politeness (e.g. no hate speech), neoliberal sociopolitical ideals (e.g. no racist ideologies), and existing sociocultural hierarchies of marginalisation (e.g. moderation of sexual content).2 Through the enforcement of its community guidelines, TikTok establishes a bounded system that targets inappropriate users/communication for censorship via algorithmic content moderation. Algospeak communication hacking enables TikTok users to subvert these interactive constraints; it disrupts this system.

Guidelines = Discourse = System

TikTok is a multimodal social media platform, providing users with audio, visual, and written communication channels, expanding opportunities for interaction and content generation. All platform interactions are moderated, predominantly through automated/algorithmic content moderation systems; TikTok remains opaque regarding specific operational details.3 Users are instead provided with a collection of community guidelines that circumscribe TikTok’s dos and don’ts (mostly don’ts): this is TikTok’s proclaimed attempt to foster an inclusive, welcoming, and safe social space. Do: treat fellow users respectfully. Don’t: incite violence. Don’t: depict sexually explicit activities. Do: express yourself. Don’t: discriminate based on religion, race, gender identity, etc. Don’t: harass others.

Users must comply with these guidelines or risk having their content removed and their accounts banned. The algorithmic content moderation systems that enable TikTok to constantly surveil its users help sustain a pervasive fear of censorship among them, in line with studies of the chilling and silencing effects that surveillance has on behaviour and communication.4 Despite the consequences of content violations, TikTok’s community guidelines are not explicit about how inappropriate content manifests in users’ communication practices. Many guidelines indicate that TikTok does not allow X, Y, or Z, but do not elaborate on which words are violative. Users must assess their own communicative content within TikTok’s systemic context and its definitions of inappropriate content, correcting when necessary, to avoid algorithmic scrutiny. Algorithmic content moderation thus coerces users into anticipatorily self-censoring.

TikTok defines its ‘safe’ space through a bricolage of sociocultural, political, and legal values, ideologies, and conventions. It establishes the rather vague boundaries of this system (or discourse, in the Foucauldian sense)5 through content guidelines and (re)definitions of what it means to be appropriate and eligible for TikTok audiences predominantly through language censorship.6 Survival within TikTok’s system translates to platform access (i.e. non-banned accounts) and visibility (i.e. content distribution/circulation). This survival is predicated on a confluence of factors. First, users must successfully internalise TikTok’s community guidelines; they must translate what these guidelines mean in terms of restricting particular language use and put these understandings into practice. Users must also develop (and reference) perceptions of how extensive they believe TikTok’s algorithmic surveillance capabilities to be – termed users’ algorithmic imaginaries.7 These imaginaries drive varying defensive digital practices, including severe self-censorship or speech chilling8 if algorithmic surveillance is perceived/believed to be comprehensive. Users mobilise these perceptions/beliefs through subsequent self-moderation9 and communication hacking. Algospeak, a medley of (written, video, audio) communication manipulation strategies, is one such defensive digital practice, protecting users within TikTok’s surveilled system by providing them with creative, innovative, and crucially, adaptive means of (communication) resistance.

Hacking Strategies

Developing research into algospeak on TikTok has illuminated numerous possibilities for communication subterfuge that algospeak users exploit in challenging TikTok surveillance, culminating in a rich toolkit of communication hacking strategies.10 Algospeak-as-hacking is a perpetually ongoing process of system disruption by which users take back some expressive agency. The informing research foregrounds algospeak strategies themselves as tools for successful and sustainable communication hacking, rather than specific algospeak forms that might be considered constitutive of a coded language. This is because the boundaries of TikTok’s system can and do shift – for example, its content moderation capabilities adapt – and users are better prepared to respond to the system’s fluidity with equally fluid hacking strategies rather than lasting (and therefore more moderate-able) words/forms. The algospeak strategies11 below demonstrate a broad range of users’ approaches to communication hacking.

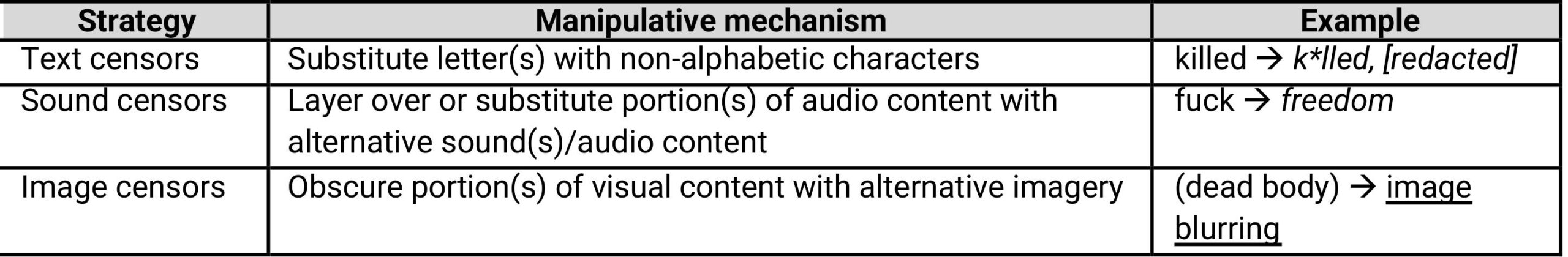

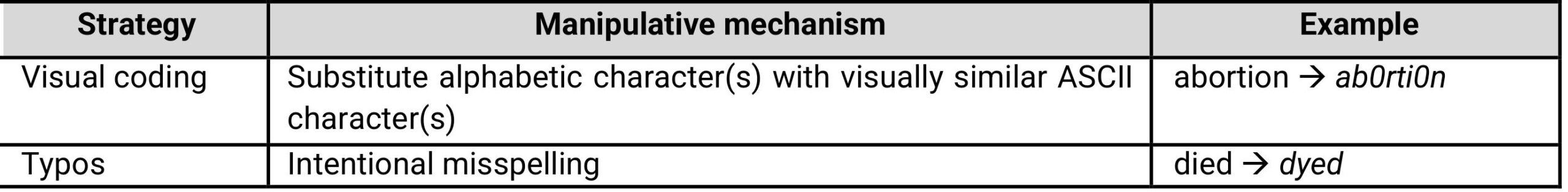

Censoring obscures content through strategic content substitution; text, sound, or image censors disrupt content filters that are triggered by linguistically meaningful words and recognisable imagery.

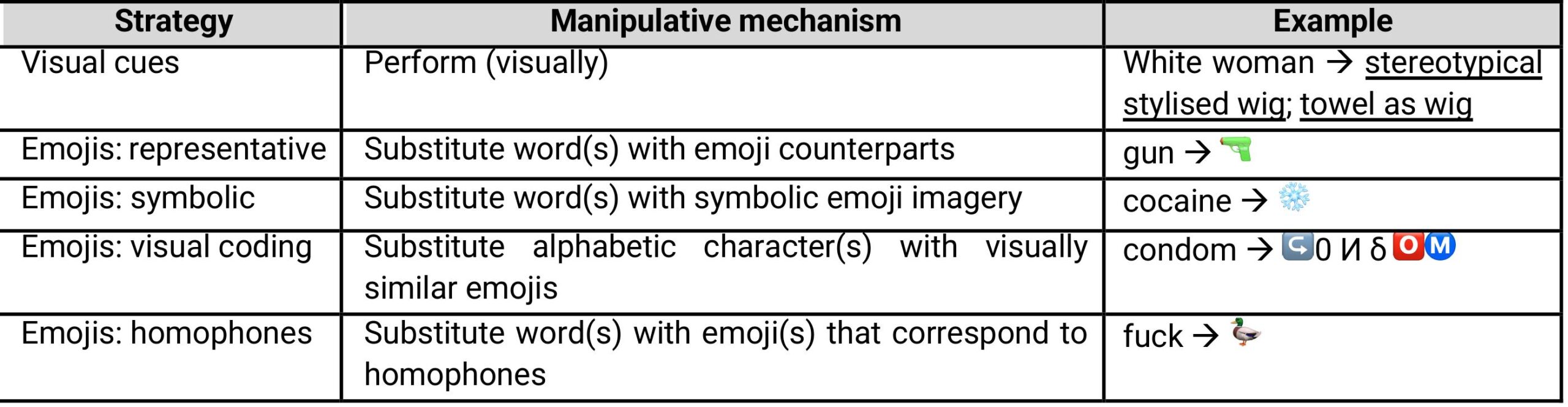

Users can also manipulate letters/characters within words or phrases. Visual coding (also l33tspeak12, hackers’ language,13 rebus writing14) and typos disguise word forms while maintaining intelligibility through users’ abilities to treat non-alphabetic characters as if they were alphabetic. Users can convey and understand meaning through these hacked spellings by making meaning where it does not exist; automated word-detecting filters cannot.
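The gap between human and automated reading described here can be illustrated with a minimal Python sketch. It is an illustration under stated assumptions, not a description of TikTok’s actual moderation system, whose operational details are not public. The blocklist, the substitution table (taken from the spellings in this article’s title), and the function names `naive_filter` and `normalising_filter` are all hypothetical.

```python
import re

# Hypothetical blocklist; real moderation word lists are not public.
BLOCKLIST = {"algospeak", "hacking"}

# Assumed character substitutions, taken from the spellings in this article's title.
LEET_MAP = str.maketrans({"@": "a", "3": "e", "0": "o", "!": "i", "1": "i"})


def naive_filter(text):
    """Return blocklisted words found by exact token matching on alphabetic runs."""
    tokens = re.findall(r"[a-z]+", text.lower())
    return sorted(BLOCKLIST.intersection(tokens))


def normalising_filter(text):
    """Undo the assumed substitutions first, then apply the same exact matching."""
    return naive_filter(text.lower().translate(LEET_MAP))


title = "@lgosp3@k: C0mmun!c@t!0n H@ck!ng on T1kT0k"

print(naive_filter(title))        # [] : the disguised spellings match nothing
print(normalising_filter(title))  # ['algospeak', 'hacking']
```

The second function also suggests why fixed forms are fragile: once a substitution has been added to the table, that particular disguise stops working, which is consistent with the emphasis here on adaptable strategies rather than lasting forms.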

Visual cues exploit recognisable cultural themes/artefacts for audience comprehension but can also encode meaning through the intertextuality or memetic culture of digital communication.15

Emojis exploit the fuzzy boundaries between visual and written channels. Emoji use can be representative (or literal); it can also integrate implied phonetic (linguistic) material, as in the case of the emoji homophone 🦆 for ‘fuck.’ Users’ digital literacies regarding evolving emoji meanings also play a substantial role in subversive emoji communication.

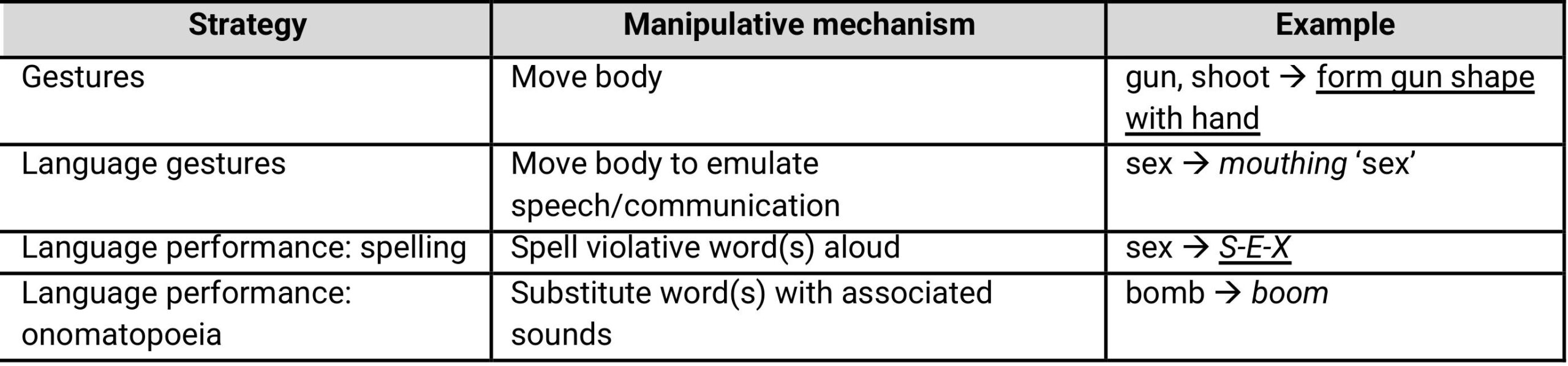

Users employ visual gestures to convey sensitive content without triggering text or sound filters.

Language gestures and performances skirt the boundaries between gesture/performance and speech by strategically removing or manipulating parts of conventional communication; this includes withholding audio content (e.g. mouthing), separating a word form (e.g. spelling), and using associative sounds to indicate particular words (e.g. onomatopoeia).

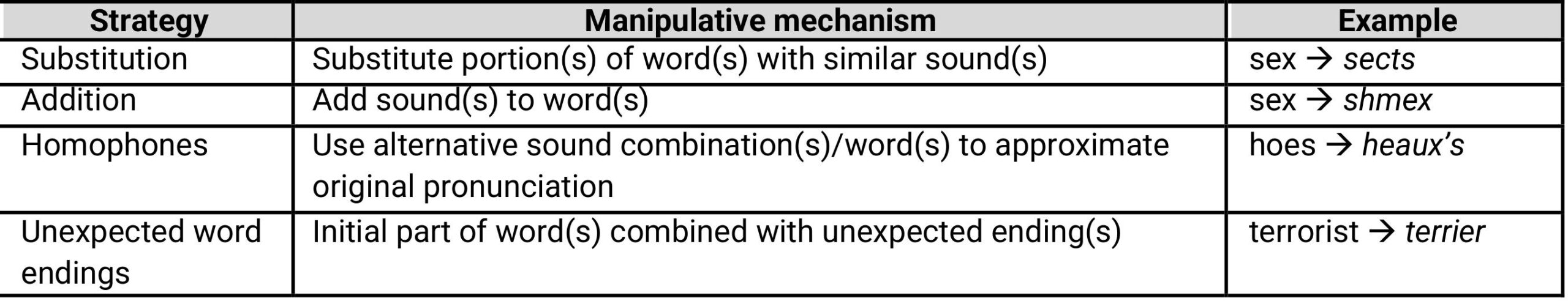

Users sometimes change the phonetic makeup of their communication while maintaining intelligibility, mostly by relying on similar sounds to keep the intended meanings retrievable for audiences.

sects, a phonetic substitution for ‘sex,’ replaces -X with -CTS, which achieves a similar pronunciation. Adding the consonants -HM before the vowel – a variation of shm-reduplication from Yiddish16 – results in a minimal difference: /seks/ becomes /ʃmeks/. Heaux’s,17 a homophone, is identical in pronunciation to its target word ‘hoes’: /hoʊz/ remains /hoʊz/. This approach to communication hacking can be extended to unexpected word endings, a strategy that attaches new endings to potentially violative words and relies on context to convey the intended meaning.

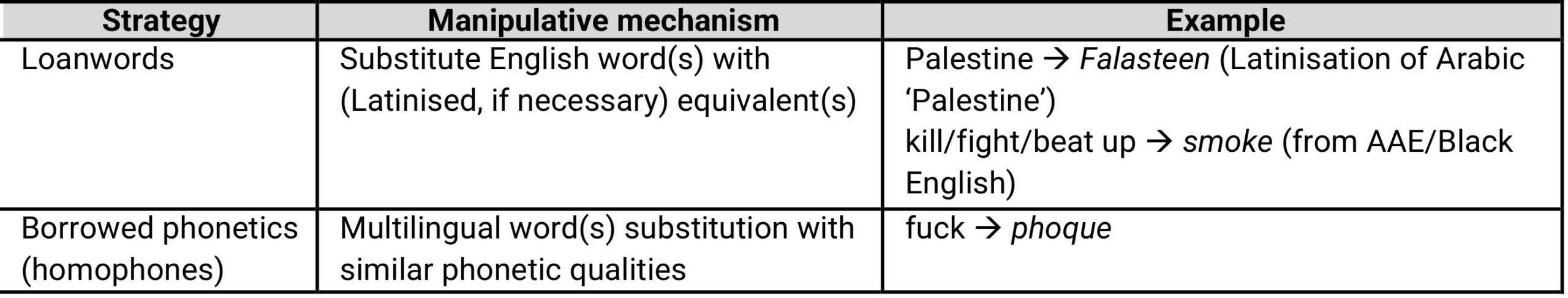

Multilingual/-dialectal creativity reveals how TikTok’s system boundaries are conceptualised by users – what is and is not considered at risk of moderation – and how these boundaries are transgressed by users in their English interactions.

Users highlight how inappropriate content is socially/culturally/etc. specific. In other words, a word’s inappropriateness shifts depending on the language variety in which it is communicated and on local social/cultural customs, norms, and conventions. Users can manipulate either one of these aspects by integrating multiple language varieties. Loanword use in (English) algospeak functions like typical loanword use: a word is borrowed from another language variety and integrated into English language content. The loanword’s non-Englishness becomes subversive, helping users evade filters meant to track locally (i.e. English) violative words. Users also borrow sounds (phonetics) from other language varieties, using multilingual homophones like phoque (‘seal’ in French) instead of ‘fuck.’ Phonetic similarities facilitate audience comprehension, and borrowed phonetics exploit the lack of situated (English) inappropriateness; phoque is not violative in French.

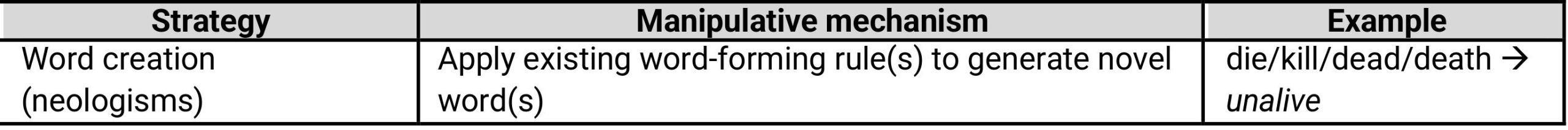

Algospeak word creation has garnered considerable attention on and beyond TikTok,18 owing in part to the novelty of such neologisms as well as their seeming status as emblems of algospeak as a coded language.

This strategy follows existing rules for word creation; for example, unalive is created by adding a negating prefix (i.e. UN-) to an adjective (i.e. ALIVE) to convey meanings regarding death or killing. The resulting word is unconventional but shares semantic qualities (i.e. literal meaning) with the word(s) it replaces. Unalive’s accordance with word creation conventions and its use of a binary opposition to convey a familiar concept facilitate audience comprehension, while its unconventionality disrupts the system’s expectations.

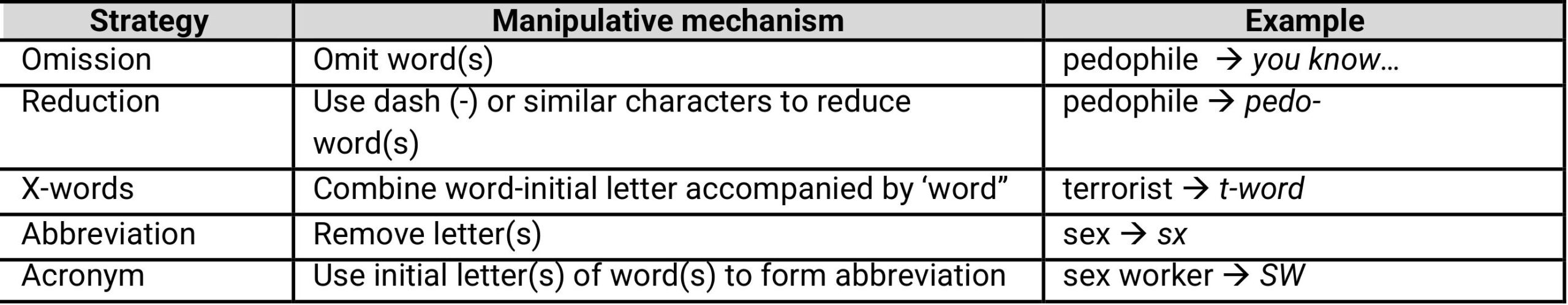

Removing part(s) of users’ communication – from vowels to entire words – is also an effective form of algospeak.

These strategies rely on context for communicative success; audiences must fill in the blanks with contextual knowledge they gather throughout the interaction. Users’ heightened sensitivities to communication and content on TikTok – driven by the centrality of TikTok’s content guidelines and moderation – contribute to the success of such partial language as hacked communication by encouraging users to anticipate implicit messaging.
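As a rough illustration of why partial language can slip past a filter while remaining readable, the following Python sketch drops vowels from each word and checks the result against an exact-match blocklist. Everything in it is an assumption made for illustration: the blocklist, the example sentence, and the helper names `drop_vowels` and `is_flagged` are hypothetical, and nothing here reflects how TikTok’s filters are actually built.

```python
import re

# Hypothetical blocklist for illustration only.
BLOCKLIST = {"moderation"}


def drop_vowels(word):
    """Keep the first character and remove every later vowel."""
    return word[0] + re.sub(r"[aeiouAEIOU]", "", word[1:])


def is_flagged(text):
    """Flag text only when a whole alphabetic token is on the blocklist."""
    tokens = re.findall(r"[a-z]+", text.lower())
    return any(token in BLOCKLIST for token in tokens)


original = "moderation is everywhere"
hacked = " ".join(drop_vowels(word) for word in original.split())

print(hacked)                # mdrtn is evrywhr
print(is_flagged(original))  # True
print(is_flagged(hacked))    # False
```

A human reader can usually restore the missing vowels from context, whereas the exact-match check has nothing left to match; this is the asymmetry that omission strategies rely on.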

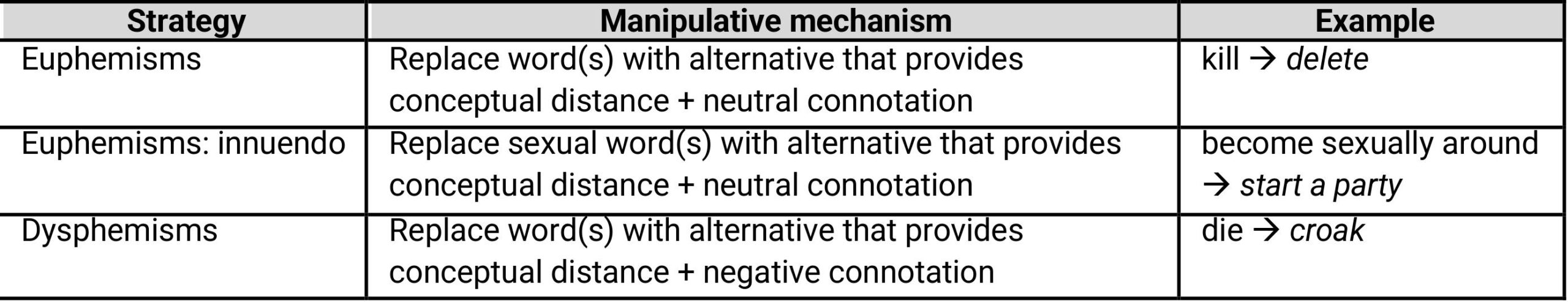

Algospeak euphemisms and dysphemisms are alternative words/expressions for at-risk communication that also reduce or increase negative connotations, respectively. One subset of euphemisms frequently used for hacking is the innuendo – owing to TikTok’s conspicuous moderation of sexual content.19

Conventional euphemisms, innuendos, and dysphemisms rely on broad, cultural intelligibility while non-conventional alternatives require audiences to access context and cues to key into the intended meaning.

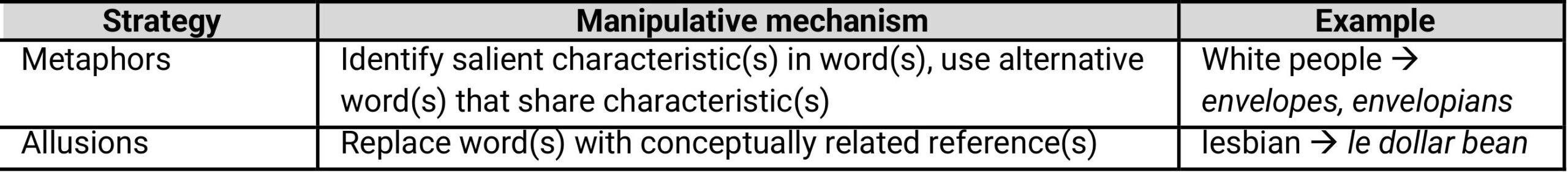

Algospeak metaphors involve mapping potentially inappropriate concepts onto new words/expressions that share some common threads of meaning or symbolism so audiences can figure out what is being communicated.

Metaphors, as well as allusions, retain one or more salient characteristic(s) of the original word or concept. In envelopes/envelopians for ‘White people,’ the salient characteristic is whiteness. This enables audiences to make inferences based on conceptual continuity between the intended meaning and the algospeak form. The eggplant emoji 🍆, used metaphorically on and off TikTok for ‘penis,’ illustrates the role of conceptual continuity in comprehending hacked communication. This continuity can rely on cultural artefacts (e.g. memes20) to ensure audience comprehension, like the meme-ification of algospeak itself (e.g. le dollar bean, which alludes to le$bian/le$bean21).

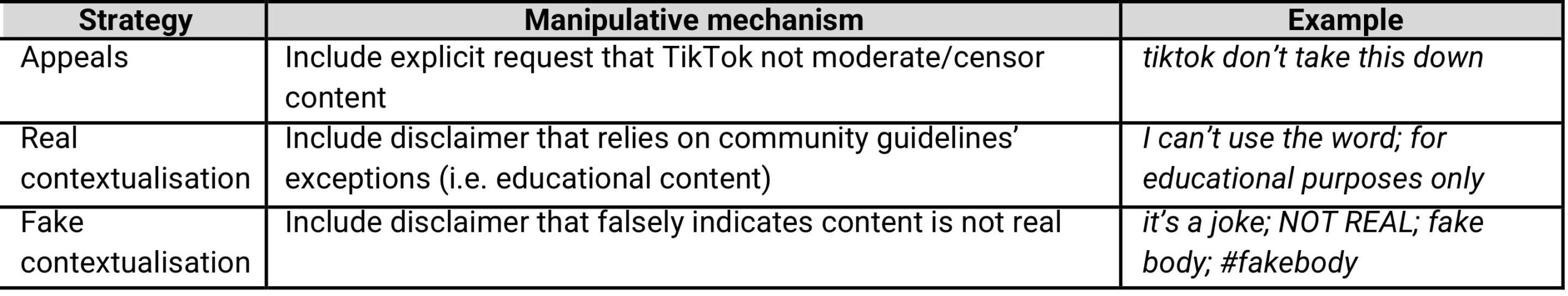

Users’ contextualisation attempts show direct, dialogic engagement with TikTok at the system level. Users attempt to negotiate with (i.e. appeals, real contextualisation) or challenge (i.e. fake contextualisation) TikTok’s content evaluation and moderation practices. These strategies function in tandem with the potentially violative content, which remains uncensored.

These strategies represent a continuum of users’ willingness to be more or less subversive. On one end of the continuum (i.e. appeals, authentic disclaimers), users directly confront the potential inappropriateness of their content. On the other end (i.e. inauthentic disclaimers), users are deceptive about the nature of their content, following the internal logic that content that is not real cannot be a real violation.22

Discussion & Conclusion

Algospeak is a process of communication hacking that has developed in the face of TikTok’s algorithmic content moderation systems, which threaten users’ platform access and visibility, as well as their communicative agency. The manipulative strategies that comprise the algospeak toolkit for subversive communication enable users to hack their lines of code to achieve their goals: literally altering segments of (language) code to embed, disguise, imply, hint, and covertly convey their intended meanings. These strategies reach across communicative channels (i.e. written, audio, visual), media types (e.g. cultural allusions), geographies and language varieties, cultures, and time (e.g. 1990s l33tspeak) to help users keep their communication safe from moderation, remain selectively comprehensible among intended audiences, and embrace creativity and adaptability. Algospeak strategies provide users with rich and diverse ways to hack their communication and manage and restrict comprehensibility, allowing them to survive within TikTok’s algorithmic surveillance state and remain visible to fellow users. These seemingly paradoxical functions of algospeak – self-censorship or self-moderation to expand content reach – mirror the double-edged nature of TikTok’s algorithmic capabilities. TikTok’s algorithms moderate and remove undesirable content; they also promote desirable content to broader audiences, expanding content visibility (e.g. viral videos). With communication hacking, users can simultaneously keep their content safe from algorithmic scrutiny and eligible for mass, algorithmic distribution. Algospeak is simultaneously communication hacking to avoid algorithms and to benefit from algorithms. Thus, algospeak – or algorithm speak – is speaking for and against algorithms.

It is crucial, however, to reckon with the implications of algospeak as a communicative phenomenon, as well as algospeak research and heightened visibility more broadly. First, contending with algospeak in the digital hands of differentially motivated users is necessary. While it is a resourceful collection of language hacks that help protect users from having their communication unfairly moderated,23 it is also accessible for strategic uses in covertly conveying harmful, discriminatory, violent communication. For example, it is possible to protect hate speech or threatening language – which are both unequivocally in violation of TikTok’s community guidelines – from content moderation through algospeak communication hacking (e.g. racist dog whistles as a kind of metaphor/allusion). Though no instances of such algospeak application were encountered during this research process, hacking is (inevitably) a tool accessible to a diverse range of users.

It is also necessary to weigh the risks associated with maintaining algospeak discourses, both among its users online and in other domains, including media journalism and academic research. Heightened algospeak visibility can backfire and unintentionally push content moderation systems like those operating on TikTok to adapt, improving moderation capabilities by integrating more complex forms and styles of communication. It is not implausible that subversive algospeak communication will someday soon be explicitly targeted for moderation, at which point algospeak use by platform users will invite rather than avoid surveillance and (algorithmic) scrutiny. It is difficult to predict exactly how such evolutions to TikTok’s content moderation practices would come about, if at all. But perhaps some solace can be taken in the observed historical successes of subversive communication: for example, Lubunca, a repertoire of secret slang (an argot) for various queer and sex worker communities in Turkey.24

TikTok surveils, moderates, and controls content and user behaviour through language. And it is through language that users disturb systemic expectations and norms. Users reassert their control, negotiating their communicative and expressive agency by pushing boundaries, manipulating language, and subverting community guidelines. They resist in the face of TikTok’s threats of censorship and deplatforming/banning. They exploit the weaknesses in TikTok’s algorithmic armour by playing across communicative channels: their human and creative communication skills outsmart and outpace current content moderation systems.25 They layer manipulations, blend strategies, juxtapose and converge communication channels: innovate, innovate, innovate. Users are resilient against the imposition of content moderation through algospeak, creating new possibilities for communication, expression, connection, and ensuring digital survival. However, as algorithmic content moderation practices adapt in response – for instance, integrating popular or widely used algospeak communication into content filters – users will need to continue to generate and deploy innovative, increasingly complex and/or creative strategies for communication hacking to stay one step ahead.

Acknowledgements: The author declares no conflict of interest.

BIO

I was born and raised in the United States of America, where I completed the majority of my undergraduate studies (in French language and linguistics). I have recently completed a research master’s in Linguistics and Communication at the University of Amsterdam. I am currently located in and conducting independent research out of Amsterdam, Netherlands. Previous and ongoing research interests include critical (technocultural) discourse analysis, argumentation analysis, communicative and interactive phenomena on digital platforms, deliberate linguistic manipulation as user agency negotiation/resistance, and communication practices language users engage in to represent/perform/negotiate their positionalities/identities.

REFERENCES

1. Danielle Blunt, Ariel Wolf, Emily Coombes, & Shanelle Mullin, “Posting Into the Void: Studying the impact of shadowbanning on sex workers and activists,” Hacking//Hustling, 2020; Faithe J. Day, “Are Censorship Algorithms Changing TikTok’s Culture?,” OneZero, December 11, 2021; Taylor Lorenz, “Internet ‘algospeak’ is changing our language in real time, from ‘nip nops’ to ‘le dollar bean’,” The Washington Post, April 8, 2022; Kait Sanchez, “TikTok says the repeat removal of the intersex hashtag was a mistake,” The Verge, June 4, 2021.

2. Blunt, Wolf, Coombes, & Mullin, 2020.

3. “Community Guidelines Enforcement Report,” TikTok. https://www.tiktok.com/transparency/nl-nl/community-guidelines-enforcement-2022-4/ (accessed March 2023); “Community Guidelines,” TikTok. https://www.tiktok.com/community-guidelines/en/ (accessed March 2023).

4. Elvin Ong, “Online Repression and Self-Censorship: Evidence from Southeast Asia,” Government and Opposition, 56 (2021): 141-162; Jonathon W. Penney, “Internet surveillance, regulation, and chilling effects online: a comparative case study,” Internet Policy Review, 6 (2017).

5. Michel Foucault, The Archaeology of Knowledge and the Discourse on Language (New York: Pantheon Books, 1972).

6. Tarleton Gillespie, “Do Not Recommend? Reduction as a Form of Content Moderation,” Social Media + Society, 8 (2022).

7. Taina Bucher, “The algorithmic imaginary: Exploring the ordinary affects of Facebook algorithms,” Information, Communication & Society, 20 (2017): 30-44; Michael A. DeVito, Darren Gergle, & Jeremy Birnholtz, “‘Algorithms ruin everything’: #RIPTwitter, folk theories, and resistance to algorithmic change in social media,” Proceedings of the 2017 Conference on Human Factors in Computing Systems (2017): 3163-3174.

8. Ong, 2021; Penney, 2017.

9. Nadia Karizat, Dan Delmonaco, Motahhare Eslami, & Nazanin Andalibi, “Algorithmic Folk Theories and Identity: How TikTok Users Co-Produce Knowledge of Identity and Engage in Algorithmic Resistance,” Proceedings of the ACM on Human-Computer Interaction, 5 (2021): 1-44; Daniel Klug, Ella Steen, & Kathryn Yurechko, “How Algorithm Awareness Impacts Algospeak Use on TikTok,” WWW ’23: The ACM Web Conference 2023 (2023).

10. Kendra Calhoun & Alexia Fawcett, “‘They Edited Out Her Nip Nops’: Linguistic Innovation as Textual Censorship Avoidance on TikTok,” Language@Internet, 21 (2023); Klug, Steen, & Yurechko, 2023; young, 2023.

11. In this article, algospeak hacked communication is in italics, its glosses (non-hacked forms) are in ‘single quotes,’ and gestures/performances are underlined.

12. Blake Sherblom-Woodward, “Hackers, Gamers and Lamers: The Use of l33t in the Computer Sub-Culture” (Master’s thesis), (University of Swarthmore, 2002).

13. Brenda Danet, Cyberpl@y: Communicating Online (Routledge, 2001).

14. David Crystal, Internet Linguistics (Routledge, 2011); Ana Deumert, Sociolinguistics and Mobile Communication (Edinburgh University Press, 2014).

15. Calhoun & Fawcett, 2023.

16. Andrew Nevins & Bert Vaux, “Metalinguistic, shmetalinguistic: the phonology of shm reduplication,” Proceedings of CLS 39 (2003).

17. This form also uses multilingual borrowed phonetics, borrowing eau(x) and its pronunciation from French.

18. Ellie Botoman, “UNALIVING THE ALGORITHM,” Cursor, 2022; Alexandra S. Levine, “From Camping To Cheese Pizza, ‘Algospeak’ Is Taking Over Social Media,” Forbes, September 19, 2022.

19. Blunt, Wolf, Coombes, & Mullin, 2020; Mikayla E. Knight, “#SEGGSED: Sex, Safety, and Censorship on TikTok” (Master’s thesis), (San Diego State University, 2022).

20. Calhoun & Fawcett, 2023.

21. Ibid.

22. Charissa Cheong, “The phrase ‘fake body’ is spreading on TikTok as users think it tricks the app into allowing semi-nude videos,” Insider, February 8, 2022.

23. Blunt, Wolf, Coombes, & Mullin, 2020; Calhoun & Fawcett, 2023.

24. Nicholas Kontovas, “Lubunca: The Historical Development of Istanbul’s Queer Slang and a Social-Functional Approach to Diachronic Processes in Language” (Master’s thesis), (Indiana University, 2012).

25. Day, 2021; Robert Gorwa, Reuben Binns, & Christian Katzenbach, “Algorithmic content moderation: Technical and political governance,” Big Data & Society, 7 (2020).